Sam The Cooking Guy Sentiment Analysis

Objective

Sam the Cooking Guy is a cooking YouTube channel that I enjoy watching. Interested in trying some sentiment analysis, I decided to analyze the comment sections of some of its videos to see how positive or negative the responses from commenters are.

About The Data

All of the comment and video data was acquired by making requests to the YouTube Data API and then storing it in a Microsoft SQL Server database.

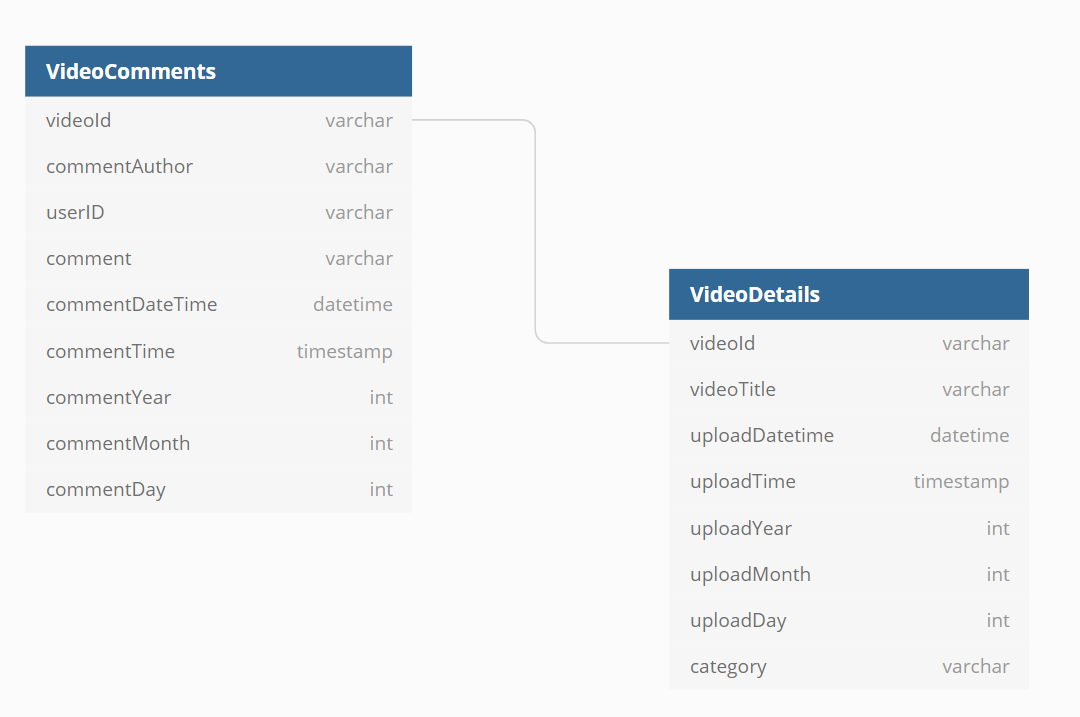

The database contains a simple schema with two tables related on the Video's ID as seen below:

All of the code for this project can be found in this GitHub repository. Check out some of my other work here.

Analysis

To begin the analysis, I import the libraries I'm going to use. For now, I'm analyzing only the burger videos in the database, so I also wrote a simple query to fetch the data for just those videos.

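```python
import pyodbc
import pandas as pd
from textblob import TextBlob
from wordcloud import WordCloud
from nltk.corpus import stopwords  # needs a one-time nltk.download('stopwords')
from nltk.tokenize import word_tokenize
import numpy as np
import matplotlib.pyplot as plt
import re
import os
from os import path
from PIL import Image
import seaborn as sns

## connecting to database and querying data
# Raw string: otherwise "\S" in the instance name is an invalid escape sequence
server = r'DESKTOP-H5MDRS4\SQLEXPRESS'
database = 'youtube_db'

# Windows authentication (Trusted_Connection) against the local SQL Express instance
conn_str = (
    'DRIVER={ODBC Driver 17 for SQL Server};'
    f'SERVER={server};'
    f'DATABASE={database};'
    'Trusted_Connection=yes;'
)
cnxn = pyodbc.connect(conn_str)

# Join each comment to its video's details and keep only burger videos.
# Note: SELECT * returns videoID twice (once from each table).
query = '''
SELECT *
FROM VideoComments c
INNER JOIN VideoDetails d ON c.videoID = d.videoID
WHERE d.category = 'Burger'
'''

# pandas may emit a UserWarning for a raw DBAPI connection; the query still runs
df = pd.read_sql(query, cnxn)
cnxn.close()
```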

Next, I take a quick look at the data and some high-level descriptive statistics.
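A quick look like this can be sketched with standard pandas calls. The small DataFrame below is a hypothetical stand-in for the result of `pd.read_sql`; the columns `title`, `author`, and `textDisplay` are assumed names for illustration and may not match the actual `VideoComments`/`VideoDetails` schema.

```python
import pandas as pd

# Hypothetical stand-in for the queried data; real column names may differ.
df = pd.DataFrame({
    "videoID": ["a1", "a1", "b2", "b2", "b2"],
    "title": ["20 LB Burger", "20 LB Burger", "Beer Can Burger",
              "Beer Can Burger", "Beer Can Burger"],
    "author": ["ann", "bob", "ann", "cy", "cy"],
    "textDisplay": ["Love it!", "Too big", "Looks awesome", "Nice", "Great"],
})

print(df.shape)    # (rows, columns)
print(df.head())   # first few rows
print(df.dtypes)   # column types

# For text columns: count, number of unique values, most frequent value, its frequency
print(df.describe(include="all"))

# Number of comments per video
comments_per_video = df["title"].value_counts()
print(comments_per_video)
```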

Word Cloud

After some text cleaning, I created a word cloud to show the most common words in the comments. Some of the most common words are burger, sam, and video, which isn't surprising since they relate directly to the videos.

Other words that stand out, such as love, good, and awesome, could be good indicators of positive sentiment.
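The cleaning step isn't shown here, so the following is only a minimal sketch of one common approach, not necessarily the one used for this project: lowercase the text, strip links and non-letter characters, drop stop words, and count what remains. The short stop-word set and the sample comments are hypothetical; the notebook's imports suggest NLTK's `stopwords` corpus was used instead. A frequency dictionary like `freqs` can be passed to `WordCloud().generate_from_frequencies(freqs)` from the `wordcloud` package to draw the cloud.

```python
import re
from collections import Counter

# Hypothetical, hand-picked stop words for illustration only
# (NLTK's stopwords.words("english") is a fuller list).
STOP_WORDS = {"the", "a", "an", "and", "is", "it", "this", "that", "i",
              "to", "of", "you", "so", "on", "for", "in"}

def clean_text(text: str) -> list:
    """Lowercase, drop URLs and non-letters, and remove stop words."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # strip links
    text = re.sub(r"[^a-z\s]", " ", text)      # keep letters and whitespace only
    return [w for w in text.split() if w not in STOP_WORDS and len(w) > 1]

# Made-up sample comments
comments = [
    "Love this burger, Sam!",
    "That burger looks so good. Awesome video!",
    "Great video, check https://example.com",
]

# Word -> count across all comments
freqs = Counter(w for c in comments for w in clean_text(c))
print(freqs.most_common(3))
```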

Comparing Polarity

The TextBlob library provides a polarity score for text on a scale from -1.0 to 1.0. Polarity measures the sentiment of a statement: -1 is the most negative connotation, 1 is the most positive, and 0 is neutral.

I was interested in how sentiment varies between the two burger videos, the 20 LB Burger and the Beer Can Burger. The two count plots show that both videos have comments that are generally positive or neutral, although the 20 LB Burger video has a much higher comment count.
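One way to get the counts behind such plots is to bucket each polarity score into a label and cross-tabulate by video. This sketch assumes hypothetical `title` and `polarity` columns with made-up scores, and treats exactly 0 as neutral; the thresholds used for the original plots aren't stated. The resulting `sentiment` column could then be drawn with `sns.countplot(data=df, x="sentiment", hue="title")`.

```python
import numpy as np
import pandas as pd

# Hypothetical polarity scores (in the notebook these would come from TextBlob)
df = pd.DataFrame({
    "title": ["20 LB Burger"] * 4 + ["Beer Can Burger"] * 3,
    "polarity": [0.5, 0.0, -0.3, 0.8, 0.0, 0.6, 0.1],
})

# Label each score: < 0 Negative, > 0 Positive, exactly 0 Neutral
df["sentiment"] = np.select(
    [df["polarity"] < 0, df["polarity"] > 0],
    ["Negative", "Positive"],
    default="Neutral",
)

# Rows: video, columns: sentiment label, values: number of comments (missing -> 0)
counts = pd.crosstab(df["title"], df["sentiment"])
print(counts)
```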

After looking at the sentiment counts, I wanted to see how each video's sentiment varied over time. To do this, I grouped the comments by the day and hour they were posted and took the average polarity of each group.
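The grouping described above can be sketched by flooring each timestamp to the hour (which keeps both the day and the hour) and averaging per video. The `publishedAt` column name and the sample timestamps are assumptions for illustration; the `count` column is included because it shows comment frequency alongside the average.

```python
import pandas as pd

# Hypothetical comments with post times and polarity scores
df = pd.DataFrame({
    "title": ["20 LB Burger"] * 3 + ["Beer Can Burger"] * 2,
    "publishedAt": pd.to_datetime([
        "2020-05-01 10:05", "2020-05-01 10:40", "2020-05-01 12:15",
        "2020-06-02 09:10", "2020-06-02 09:50",
    ]),
    "polarity": [0.6, -0.2, 0.4, 0.3, 0.1],
})

# Truncate each timestamp to its day and hour, then average polarity per video per hour
hourly = (
    df.assign(hour=df["publishedAt"].dt.floor("h"))
      .groupby(["title", "hour"])["polarity"]
      .agg(["mean", "count"])
)
print(hourly)
```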

The average polarity of comments on both videos is largely positive, although each video has its spikes of negativity. Comments are also much more frequent on the 20 LB Burger video than on the Beer Can Burger video, but this may partly be because the 20 LB Burger video has been online longer.

Comparing Subjectivity

TextBlob also provides a subjectivity score on a scale from 0.0 to 1.0, where 0 is a factual statement and 1 is a statement based on opinion and emotion.

This might not give much insight for YouTube comments, since I suspect most comments are subjective, for example: "I liked/disliked the video" or "the burger looks good/bad". Still, I am interested in how subjective the comments are.

Much to my surprise, the distributions show a large share of comments in the objective to low-subjectivity range.

After a little more investigating, I found that 41% of the comments on the 20 LB Burger video and 38% of the comments on the Beer Can Burger video have a subjectivity between 0 and 0.1. Although most comments on both videos still fall above this range, the share of low-subjectivity comments is much higher than I initially expected.
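A percentage like this can be computed as the mean of a boolean mask per video. This is a sketch on hypothetical data with assumed `title` and `subjectivity` columns; it treats the range as inclusive on both ends, which may differ slightly from the original calculation.

```python
import pandas as pd

# Hypothetical subjectivity scores
df = pd.DataFrame({
    "title": ["20 LB Burger"] * 5 + ["Beer Can Burger"] * 4,
    "subjectivity": [0.0, 0.05, 0.6, 0.9, 0.1, 0.0, 0.45, 0.8, 0.7],
})

# True where 0 <= subjectivity <= 0.1; the mean of a boolean group is its share of True
low_share = (
    df["subjectivity"].between(0, 0.1)
      .groupby(df["title"])
      .mean()
      .mul(100)  # convert to a percentage
)
print(low_share.round(1))
```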

For both videos, the relationship between subjectivity and polarity is as expected: as subjectivity increases, polarity moves further away from neutral. This makes sense, since a comment perceived as more positive or negative is more likely to use language that conveys emotion or opinion.
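One way to put a number on this pattern is to correlate subjectivity with the absolute value of polarity, i.e. the distance from neutral. This is an added check rather than part of the original analysis, and the scores below are hypothetical.

```python
import pandas as pd

# Hypothetical (polarity, subjectivity) pairs
df = pd.DataFrame({
    "polarity":     [0.0, 0.1, -0.2, 0.5, -0.6, 0.9],
    "subjectivity": [0.0, 0.2,  0.3, 0.6,  0.7, 1.0],
})

# Distance from neutral, regardless of direction
df["abs_polarity"] = df["polarity"].abs()

# Pearson correlation (pandas default); values near +1 mean more subjective
# comments sit further from neutral
r = df["subjectivity"].corr(df["abs_polarity"])
print(round(r, 3))
```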

Repeat Commenters

One last point of interest is users who posted multiple comments, and the sentiment of those users.

First, I looked at how many users posted multiple comments.

Of all the individuals who posted a comment, only about 6.6% made more than one comment. However, of the users who made multiple comments, about 46% commented on both videos.
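Both percentages can be derived by counting comments and distinct videos per user. This sketch uses a hypothetical `author` column as the user identifier and assumes the data contains exactly two videos, so commenting on "both" means two distinct titles.

```python
import pandas as pd

# Hypothetical comment authors and the video each comment was posted on
df = pd.DataFrame({
    "author": ["ann", "bob", "ann", "cy", "cy", "dee", "eve"],
    "title": ["20 LB Burger", "20 LB Burger", "Beer Can Burger",
              "Beer Can Burger", "Beer Can Burger", "20 LB Burger",
              "Beer Can Burger"],
})

# One row per user: total comments and number of distinct videos commented on
per_user = df.groupby("author").agg(
    n_comments=("title", "count"),
    n_videos=("title", "nunique"),
)

repeat = per_user[per_user["n_comments"] > 1]
pct_repeat = 100 * len(repeat) / len(per_user)
# Assumes exactly two videos in the data, so n_videos == 2 means "both"
pct_both = 100 * (repeat["n_videos"] == 2).mean()

print(f"{pct_repeat:.1f}% of commenters posted more than once")
print(f"{pct_both:.1f}% of repeat commenters commented on both videos")
```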

The polarity distributions among the repeat commenters are all fairly similar, with a large share of comments hovering around neutral to slightly positive.

The subjectivity distributions are also similar: many comments are low in subjectivity. This makes sense, since the earlier plot of subjectivity vs. polarity showed that text with subjectivity near 0 tends to be close to neutral in polarity.

Finally, I looked at the average polarity of the users who posted multiple comments.

The average polarity of repeat commenters is similar to that of all commenters: generally positive, with some small spikes of negativity.

One interesting thing to note is how often the users who commented on both videos posted: there is a lot of activity from these users shortly after each video is uploaded. This could indicate that users who comment on multiple videos are also likely to watch them soon after they are uploaded.
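To check this more directly, one could measure how many hours after upload each comment was posted. The sketch below assumes hypothetical `commentPublishedAt` and `videoPublishedAt` columns (the YouTube Data API returns `publishedAt` timestamps for both comments and videos, but the column names in this database are unknown) and uses made-up data.

```python
import pandas as pd

# Hypothetical comments and video upload times
comments = pd.DataFrame({
    "videoID": ["a1", "a1", "b2", "b2"],
    "author": ["ann", "ann", "ann", "bob"],
    "commentPublishedAt": pd.to_datetime([
        "2020-05-01 11:00", "2020-05-03 08:00",
        "2020-06-02 09:30", "2020-06-20 18:00",
    ]),
})
videos = pd.DataFrame({
    "videoID": ["a1", "b2"],
    "videoPublishedAt": pd.to_datetime(["2020-05-01 10:00", "2020-06-02 09:00"]),
})

# Attach each comment's video upload time, then compute the delay in hours
merged = comments.merge(videos, on="videoID", how="left")
merged["hours_after_upload"] = (
    (merged["commentPublishedAt"] - merged["videoPublishedAt"])
    .dt.total_seconds() / 3600
)

# Median delay per author: smaller values mean they tend to comment soon after upload
delay = merged.groupby("author")["hours_after_upload"].median()
print(delay)
```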

Conclusion

Overall, the sentiment of users who commented on these videos is generally positive, which suggests that the users who chose to comment mostly wanted to express their enjoyment of the video or the food that was made.

In the future, I could collect comments from more burger videos to see whether the sentiment changes with a larger sample. I could also query other categories, such as chicken, to compare sentiment across categories.